GPT Chat

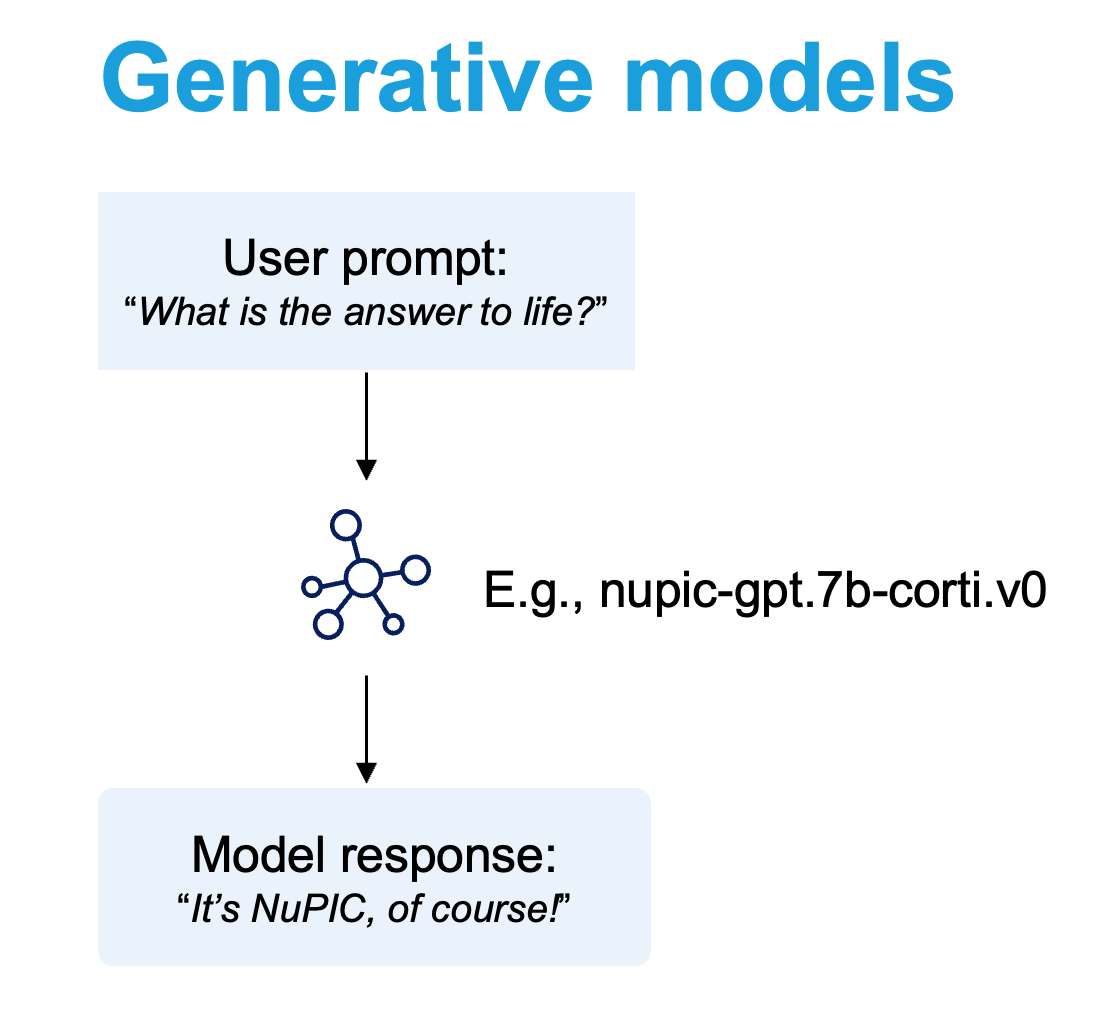

The GPT chat use case uses generative GPT models to deliver real-time, interactive conversations. This capability is particularly useful for chatbots and virtual assistants, providing users with responses that are contextually relevant and accurate.

Quick Start

Before you start, make sure the NuPIC Inference Server is up and running, and the Python environment is set up.

Navigate to the directory containing the GPT quick start example:

cd nupic.examples/examples/gpt

An installation of NuPIC includes NuPIC-GPT models. To see nupic-gpt.7b-corti.v0 in action, run the example with the following command:

python gpt.py

This sends a prompt to the model asking for a list of colors in the order that they appear in the rainbow. You should get a response similar to this:

User:

You are a helpful AI assistant.

Could you please provide a list of colors, arranged in the same order as they appear in a rainbow?

Assistant:

Certainly! Here is a list of all the colors that make up a rainbow (in order): red, orange, yellow, green, blue, indigo, violet. I hope this helps! Let me know if there's anything else I can assist with.

Remember, all of this ran on CPU only!

In More Detail

In this section, we examine parts of the code in gpt.py that highlight characteristics specific to using GPT models within NuPIC.

System Prompts

GPT-based chatbots generally require system prompts, which are text strings prepended to each conversation to set the scope and tone of the chatbot's interactions with human users. For instance, we can change the system prompt in gpt.py to ask the model to speak like a pirate:

system_prompt = "You are a helpful AI assistant who speaks like a pirate."

This gives the following output:

Results: User:

You are a helpful AI assistant who speaks like a pirate.

Could you please provide a list of colors, arranged in the same order as they appear in a rainbow?

Assistant:

Ahoy matey! Here's your requested list of colors - red (1), orange (2), yellow (3), green (4), blue (5), indigo (6), violet (7). Enjoy!

Model Parameters

Notice that gpt.py also specifies some additional parameters that can be useful for controlling the behavior of GPT models:

# model parameters definition
# commented params will use the model default at the inference server
inference_params = {
    # output length
    "min_new_tokens": 0,
    "max_new_tokens": 512,
    # generation strategy
    # "do_sample": True,
    # "prompt_lookup_num_tokens": 10,
    # output logits
    # "temperature": 1.0,
    # "top_k": 50,
    # "top_p": 1.0,
    # "repetition_penalty": 1.0,
}

Chatty GPT?

Is your GPT model giving excessively long responses? The max_new_tokens parameter is particularly useful for constraining the length of GPT model outputs, so as to reduce verbosity and computational costs.
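As a rough illustration, the sketch below builds a parameter dictionary with a tighter output cap. It assumes the dictionary is passed to the server in the same way as inference_params in gpt.py. The helper make_inference_params is hypothetical and not part of NuPIC; it only mirrors the convention described above, where any parameter left out falls back to the model default at the inference server.

```python
# Hypothetical helper for illustration; not part of gpt.py or the NuPIC client.
def make_inference_params(max_new_tokens=512, **overrides):
    """Build an inference_params dict, leaving out anything that is unset."""
    if max_new_tokens < 1:
        raise ValueError("max_new_tokens must be at least 1")

    params = {"min_new_tokens": 0, "max_new_tokens": max_new_tokens}

    # Overrides set to None are dropped, so the server default applies,
    # just like the commented-out entries in gpt.py.
    params.update({k: v for k, v in overrides.items() if v is not None})
    return params


# Cap responses at 64 new tokens and keep every other setting at its default.
short_params = make_inference_params(max_new_tokens=64, temperature=None)
print(short_params)
```

Lowering max_new_tokens this way truncates generation once the cap is reached, so pick a value that still leaves room for a complete answer.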

GPT with LangChain

Are you planning to use LangChain to orchestrate your GPT chatbot instead? Check out this page to see how NuPIC can help with that.
